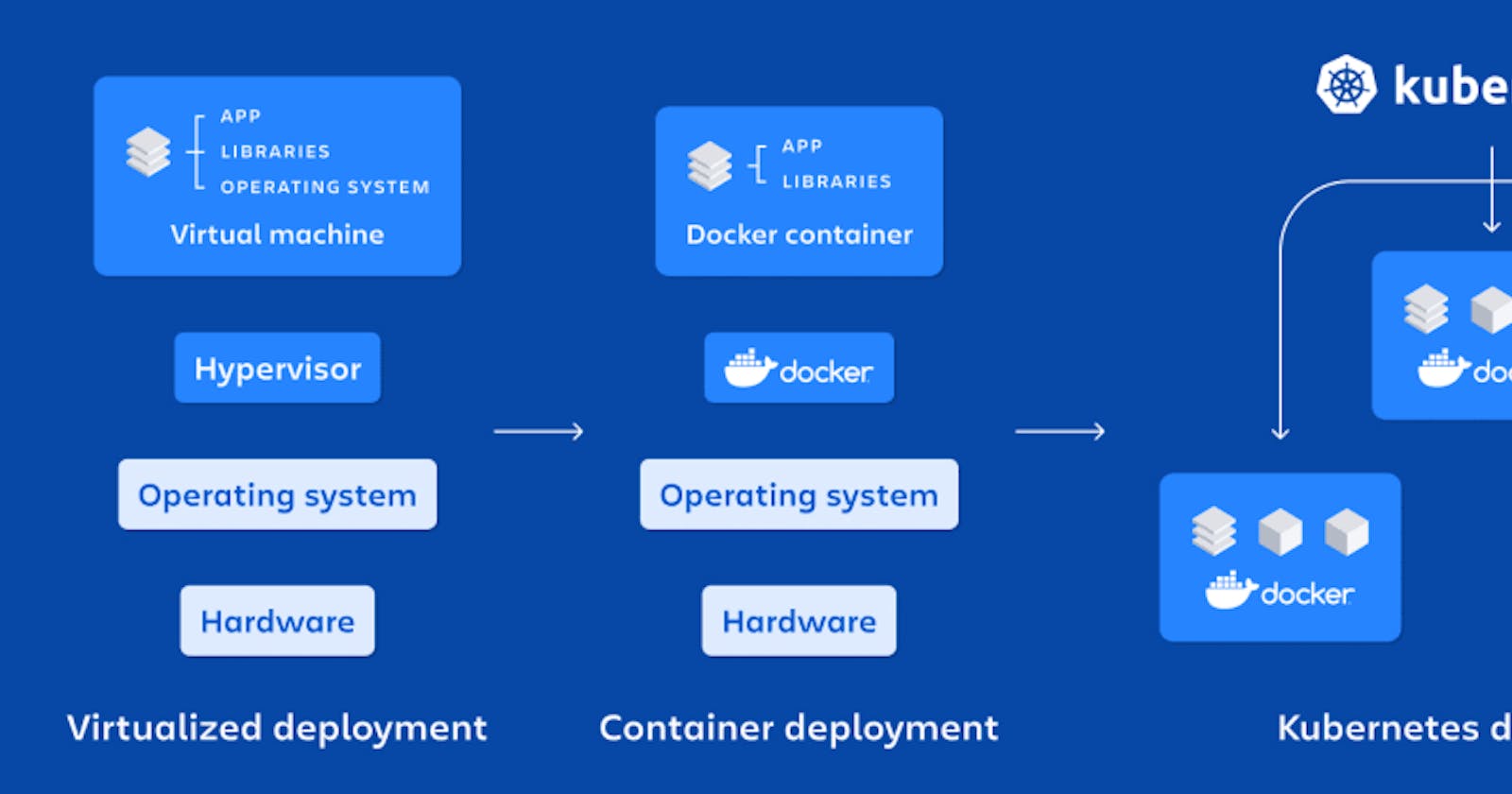

Docker and Kubernetes are both popular container technologies used to build, deploy, and manage applications, but they serve different purposes.

Docker is a containerization platform that lets developers package an application and its dependencies into a container image, so the application runs the same way in any environment. Containers are lightweight and fast to start because they share the host's kernel instead of booting a full operating system. For the same reason, Linux containers need a Linux kernel: on macOS and Windows, Docker Desktop runs them inside a lightweight virtual machine. Docker uses a client-server architecture built around three components. The Docker daemon builds and runs containers, the Docker CLI lets users send commands to the daemon, and a Docker registry (such as Docker Hub or a self-hosted registry) stores and shares images.
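To make the client-server split concrete: the `docker` CLI is a client of the daemon's HTTP API, which on Linux listens on the Unix socket `/var/run/docker.sock` by default. The sketch below lists running containers (roughly what `docker ps` shows) by calling the Engine API's `GET /containers/json` endpoint directly with Node's built-in `http` module. It assumes the default socket path and that the current user is allowed to access it; `listContainers` is a hypothetical helper name.

```javascript
const http = require("node:http");

// Ask the Docker daemon for its running containers over the Unix socket.
// The docker CLI makes this same kind of HTTP request under the hood.
function listContainers(socketPath = "/var/run/docker.sock") {
  return new Promise((resolve, reject) => {
    const req = http.request(
      { socketPath, path: "/containers/json", method: "GET" },
      (res) => {
        let data = "";
        res.setEncoding("utf8");
        res.on("data", (chunk) => (data += chunk));
        res.on("end", () => {
          if (res.statusCode !== 200) {
            reject(new Error(`Docker API returned ${res.statusCode}: ${data}`));
            return;
          }
          try {
            resolve(JSON.parse(data));
          } catch (err) {
            reject(err);
          }
        });
      }
    );
    // Connection problems (daemon not running, socket missing, no permission)
    // surface here as errors such as ENOENT or EACCES.
    req.on("error", reject);
    req.end();
  });
}

listContainers()
  .then((containers) => {
    for (const c of containers) {
      console.log(c.Id.slice(0, 12), c.Image, c.Status);
    }
  })
  .catch((err) => console.error("Could not reach the Docker daemon:", err.message));
```

Nothing here is specific to the CLI: any client that can speak HTTP to the daemon's socket can build, start, and inspect containers the same way.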

Kubernetes, on the other hand, is a container orchestration platform that automates the deployment, scaling, and management of containerized applications across a cluster of machines (nodes). It restarts or replaces failed containers and adjusts the number of running replicas, which keeps applications available and lets them scale. Kubernetes manages resources through a declarative API: users describe the desired state, and Kubernetes continuously works to make the actual state match it. It also provides service discovery, load balancing, and rolling updates, which make it easier to operate applications at scale.
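The declarative model boils down to a control loop: compare the state you declared with the state you observe, then act to close the gap. The following is a deliberately simplified JavaScript sketch of one pass of such a loop. The `reconcile` function and the `{ name, healthy }` pod shape are hypothetical; real Kubernetes controllers watch the API server and handle many more cases.

```javascript
// A toy model of a Kubernetes-style controller (hypothetical names).
// desiredReplicas: what the user declared, e.g. `replicas: 3`.
// pods: the observed state, as [{ name, healthy }].
// Returns the actions that would move the observed state to the desired one.
function reconcile(desiredReplicas, pods) {
  const actions = [];
  const healthy = pods.filter((p) => p.healthy);

  // Remove failed pods; they are replaced by the "create" step below.
  for (const p of pods) {
    if (!p.healthy) actions.push({ type: "delete", pod: p.name });
  }

  if (healthy.length < desiredReplicas) {
    // Too few healthy pods: create the missing ones.
    for (let i = healthy.length; i < desiredReplicas; i++) {
      actions.push({ type: "create" });
    }
  } else if (healthy.length > desiredReplicas) {
    // Too many: delete the surplus (scale down).
    for (const p of healthy.slice(desiredReplicas)) {
      actions.push({ type: "delete", pod: p.name });
    }
  }
  return actions;
}

// One of three pods has failed: delete it and create a replacement.
const actions = reconcile(3, [
  { name: "my-app-a", healthy: true },
  { name: "my-app-b", healthy: false },
  { name: "my-app-c", healthy: true },
]);
console.log(actions);
```

Once the actions have been applied, calling `reconcile` again with three healthy pods returns an empty list. That idempotence is what lets a controller run the loop over and over without knowing how the cluster drifted from the desired state.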

To understand the difference between Docker and Kubernetes, consider the following example:

Suppose you have a web application that consists of multiple microservices, each running in its own Docker container. You want to deploy this application on a cluster of servers, keep it available when individual containers or servers fail, and scale it with demand.

Using Docker, you can package each microservice into its own image and then use Docker Compose to define and run the whole application stack. A Compose file describes the containers and how they are networked and configured, and Compose starts them all with a single command. However, Compose runs the stack on a single Docker host. To spread the application across multiple servers and keep it running when a container or server fails, you need an orchestrator such as Kubernetes.

With Kubernetes, you create a Deployment object for each microservice that defines its desired state: the number of replicas, the container image, the resource requirements, and other configuration details. Kubernetes then schedules the replicas (as pods) across the nodes in the cluster and monitors them. If a container crashes or fails its liveness probe (a configurable health check), Kubernetes restarts it; if an entire node fails, the affected pods are recreated on the remaining healthy nodes. Either way, the application stays available without manual intervention.
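Scheduling and self-healing can be sketched the same way. The toy scheduler below places each replica on the healthy node currently running the fewest pods and, when a node is marked unhealthy, reassigns its pods to the remaining nodes. This is a simplification under stated assumptions: the real kube-scheduler first filters nodes (resource requests, taints, affinity) and then scores them with configurable plugins, and all function and field names here are hypothetical.

```javascript
// Pick the healthy node with the fewest pods (ties go to the earliest node).
function pickNode(nodes, placement) {
  let best = null;
  for (const node of nodes) {
    if (!node.healthy) continue;
    const load = placement[node.name].length;
    if (best === null || load < placement[best.name].length) best = node;
  }
  if (best === null) throw new Error("no healthy nodes available");
  return best;
}

// Place `replicas` pods named <app>-0, <app>-1, ... across the nodes.
function schedule(app, replicas, nodes) {
  const placement = Object.fromEntries(nodes.map((n) => [n.name, []]));
  for (let i = 0; i < replicas; i++) {
    placement[pickNode(nodes, placement).name].push(`${app}-${i}`);
  }
  return placement;
}

// Reassign pods from unhealthy nodes to healthy ones. (Real Kubernetes
// creates new pods with new names rather than moving the old ones.)
function rescheduleFailed(nodes, placement) {
  for (const node of nodes) {
    if (node.healthy) continue;
    const orphans = placement[node.name];
    placement[node.name] = [];
    for (const pod of orphans) {
      placement[pickNode(nodes, placement).name].push(pod);
    }
  }
  return placement;
}

const nodes = [
  { name: "node-1", healthy: true },
  { name: "node-2", healthy: true },
  { name: "node-3", healthy: true },
];
const placement = schedule("my-app", 3, nodes);
console.log(placement); // one replica per node

nodes[1].healthy = false; // node-2 goes down
console.log(rescheduleFailed(nodes, placement));
```

Spreading replicas across nodes is what makes the node-failure case survivable: losing node-2 removes only one of the three replicas, and the other two keep serving while the replacement starts.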

In summary, Docker is a containerization platform that makes it easier to package and run applications, while Kubernetes is a container orchestration platform that automates the deployment, scaling, and management of containerized applications. Docker is the lower-level technology used to build and run containers; Kubernetes is the higher-level technology used to deploy and manage them at scale.

Let me demonstrate with a simple example:

Let's say we have a simple Node.js application that consists of a web server that serves a "Hello, World!" message on port 3000. We want to package this application into a Docker container and deploy it on a Kubernetes cluster.

First, let's create a Dockerfile that specifies the Docker image we want to build:

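```dockerfile
# Dockerfile

# Maintained Node.js LTS release on Alpine Linux, for a small image
FROM node:20-alpine

WORKDIR /app

# Copy the dependency manifests first so the install layer is cached
# and only re-runs when package.json or package-lock.json changes
COPY package*.json ./
RUN npm install

# Copy the rest of the application source
COPY . .

# Document the port the web server listens on
EXPOSE 3000

CMD [ "npm", "start" ]
```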

This Dockerfile starts from a Node.js base image, sets the working directory, installs dependencies, copies the application files, documents that the container listens on port 3000, and runs npm start to launch the web server.

To build the Docker image, we can run the following command:

```shell
docker build -t my-app:1.0 .
```

This command builds the Docker image and tags it with the name my-app and version 1.0.

Kubernetes

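Now, let's deploy the image on a Kubernetes cluster. The cluster's nodes must be able to pull my-app:1.0, so the image has to be made available to them first. Either push it to a registry the cluster can reach (`docker tag my-app:1.0 <registry>/my-app:1.0`, then `docker push <registry>/my-app:1.0`, and use that full image name in the manifest), or, on a local cluster, load it directly with `kind load docker-image my-app:1.0` or `minikube image load my-app:1.0`. Next, we create a Kubernetes Deployment object that specifies the desired state of the application: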

```yaml
# deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app
          image: my-app:1.0
          ports:
            - containerPort: 3000
```

This manifest defines a Deployment named my-app that runs three replicas of the application from the my-app:1.0 image. The selector tells the Deployment which pods it manages (those labeled app: my-app), and containerPort declares that the container listens on port 3000.
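The `selector.matchLabels` field must match the labels in `template.metadata.labels`; the API server rejects an `apps/v1` Deployment whose selector does not match its pod template. Under `matchLabels`, a pod is selected only if it carries every listed key with the same value, and any extra labels on the pod are ignored. A small JavaScript sketch of that rule, using a hypothetical `matchesLabels` helper:

```javascript
// matchLabels semantics: every key/value pair in the selector must appear
// on the pod with the same value. Labels not mentioned in the selector
// are ignored.
function matchesLabels(matchLabels, podLabels) {
  return Object.entries(matchLabels).every(([key, value]) => podLabels[key] === value);
}

const selector = { app: "my-app" };

console.log(matchesLabels(selector, { app: "my-app" }));               // true
console.log(matchesLabels(selector, { app: "my-app", tier: "web" }));  // true
console.log(matchesLabels(selector, { app: "other-app" }));            // false
console.log(matchesLabels(selector, {}));                              // false
```

The Service defined next selects its backend pods using the same equality-based rule.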

To deploy the application on the Kubernetes cluster, we can run the following command:

```shell
kubectl apply -f deployment.yaml
```

This command creates the Deployment, and Kubernetes schedules its three pods across the nodes in the cluster. You can check their status with kubectl get pods.

With Kubernetes, we can also use a service object to expose the application to the outside world:

```yaml
# service.yaml
apiVersion: v1
kind: Service
metadata:
  name: my-app
spec:
  selector:
    app: my-app
  ports:
    - name: http
      protocol: TCP
      port: 80
      targetPort: 3000
  type: LoadBalancer
```

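This manifest defines a Service named my-app that selects the pods labeled app: my-app, accepts traffic on port 80, and forwards it to port 3000 on those pods, spreading connections across the replicas. Setting type: LoadBalancer asks the cloud provider to provision an external load balancer; on a local cluster without one (kind or minikube, for example), the external IP stays pending unless you use a tool such as minikube tunnel.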
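Conceptually, the Service keeps a list of endpoints (the pods whose labels match its selector), accepts connections on `port: 80`, and forwards each one to `targetPort: 3000` on one of those pods. The sketch below models that with round-robin selection. This is an assumption made for readability: kube-proxy in its default iptables mode picks a backend at random. The pod IPs and the `makeService` helper are hypothetical.

```javascript
// Hypothetical pods: only those whose labels match the Service selector
// become endpoints.
const pods = [
  { ip: "10.244.1.5", labels: { app: "my-app" } },
  { ip: "10.244.2.7", labels: { app: "my-app" } },
  { ip: "10.244.3.2", labels: { app: "my-app" } },
  { ip: "10.244.3.9", labels: { app: "database" } },
];

function makeService({ selector, port, targetPort }, allPods) {
  // Endpoints: pods carrying every selector label with the same value.
  const endpoints = allPods.filter((pod) =>
    Object.entries(selector).every(([k, v]) => pod.labels[k] === v)
  );
  let next = 0;
  return {
    port,
    // Return the backend address for a new connection arriving on `port`.
    route() {
      if (endpoints.length === 0) throw new Error("no endpoints for this Service");
      const pod = endpoints[next % endpoints.length];
      next += 1;
      return `${pod.ip}:${targetPort}`;
    },
  };
}

const svc = makeService({ selector: { app: "my-app" }, port: 80, targetPort: 3000 }, pods);
for (let i = 0; i < 4; i++) console.log(`:${svc.port} ->`, svc.route());
```

The `database` pod never receives traffic because its labels do not match the selector, which is why consistent labels across the Deployment template and the Service matter.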

To create the service object, we can run the following command:

```shell
kubectl apply -f service.yaml
```

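This command creates the Service. Once the load balancer has been provisioned, kubectl get service my-app shows its external IP, and the application is reachable from outside the cluster on port 80.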

In summary, Docker lets us package the application into a container image, and Kubernetes lets us deploy and manage that container at scale. With Docker, we build the image and can run it locally; with Kubernetes, we run it on a cluster of nodes that keeps it available and can scale it as needed.